Once the instances are up, create the cluster the same way as before.

root@5141467f762d:/data# redis-cli --cluster create 172.25.253.0:8000 172.25.253.0:8001 172.25.253.0:8002 172.25.253.0:8003 172.25.253.0:8004 172.25.253.0:8005 172.25.253.0:8006 172.25.253.0:8007 172.25.253.0:8008 172.25.253.0:8009 --cluster-replicas 1 -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 10 nodes...

Master[0] -> Slots 0 - 3276

Master[1] -> Slots 3277 - 6553

Master[2] -> Slots 6554 - 9829

Master[3] -> Slots 9830 - 13106

Master[4] -> Slots 13107 - 16383

Adding replica 172.25.253.0:8006 to 172.25.253.0:8000

Adding replica 172.25.253.0:8007 to 172.25.253.0:8001

Adding replica 172.25.253.0:8008 to 172.25.253.0:8002

Adding replica 172.25.253.0:8009 to 172.25.253.0:8003

Adding replica 172.25.253.0:8005 to 172.25.253.0:8004

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: e360847d352d53946fe3d8df45eb85d345f5e03b 172.25.253.0:8000

slots:[0-3276] (3277 slots) master

M: 23a3550fdf50cb86a6d12d56627757318ea9ad67 172.25.253.0:8001

slots:[3277-6553] (3277 slots) master

M: 054e696ec5639f8bf68293f63a2d1e6507062e47 172.25.253.0:8002

slots:[6554-9829] (3276 slots) master

M: 4f9cc0b67da6a31ca9a1f596154d1d4e02932086 172.25.253.0:8003

slots:[9830-13106] (3277 slots) master

M: 708043e6b8b70953cc162b733d15be3fc951efca 172.25.253.0:8004

slots:[13107-16383] (3277 slots) master

S: c85a6dd73665472fdd76d83b78bf3093669b2c4e 172.25.253.0:8005

replicates 054e696ec5639f8bf68293f63a2d1e6507062e47

S: 478c2ad79e506106bc5471df3e545f4624c60b43 172.25.253.0:8006

replicates 23a3550fdf50cb86a6d12d56627757318ea9ad67

S: f9bad9c5b76e9609c16a503dbd9b23f4d62d5c9d 172.25.253.0:8007

replicates 708043e6b8b70953cc162b733d15be3fc951efca

S: 0c14761377ed09d955e06a8e7bcd8354494bc711 172.25.253.0:8008

replicates 4f9cc0b67da6a31ca9a1f596154d1d4e02932086

S: 236c13a695d926a2b739b90092de49a8089c30cc 172.25.253.0:8009

replicates e360847d352d53946fe3d8df45eb85d345f5e03b

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 172.25.253.0:8000)

M: e360847d352d53946fe3d8df45eb85d345f5e03b 172.25.253.0:8000

slots:[0-3276] (3277 slots) master

1 additional replica(s)

M: 23a3550fdf50cb86a6d12d56627757318ea9ad67 172.25.253.0:8001

slots:[3277-6553] (3277 slots) master

1 additional replica(s)

S: c85a6dd73665472fdd76d83b78bf3093669b2c4e 172.25.253.0:8005

slots: (0 slots) slave

replicates 054e696ec5639f8bf68293f63a2d1e6507062e47

S: f9bad9c5b76e9609c16a503dbd9b23f4d62d5c9d 172.25.253.0:8007

slots: (0 slots) slave

replicates 708043e6b8b70953cc162b733d15be3fc951efca

S: 478c2ad79e506106bc5471df3e545f4624c60b43 172.25.253.0:8006

slots: (0 slots) slave

replicates 23a3550fdf50cb86a6d12d56627757318ea9ad67

S: 0c14761377ed09d955e06a8e7bcd8354494bc711 172.25.253.0:8008

slots: (0 slots) slave

replicates 4f9cc0b67da6a31ca9a1f596154d1d4e02932086

S: 236c13a695d926a2b739b90092de49a8089c30cc 172.25.253.0:8009

slots: (0 slots) slave

replicates e360847d352d53946fe3d8df45eb85d345f5e03b

M: 708043e6b8b70953cc162b733d15be3fc951efca 172.25.253.0:8004

slots:[13107-16383] (3277 slots) master

1 additional replica(s)

M: 054e696ec5639f8bf68293f63a2d1e6507062e47 172.25.253.0:8002

slots:[6554-9829] (3276 slots) master

1 additional replica(s)

M: 4f9cc0b67da6a31ca9a1f596154d1d4e02932086 172.25.253.0:8003

slots:[9830-13106] (3277 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
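
The slot ranges printed under "Performing hash slots allocation on 10 nodes" can be reproduced with a little arithmetic. The sketch below is only an illustration: it assumes the 16384 slots are split by advancing a floating-point cursor by 16384 / masters and rounding, which happens to match the ranges in the output above. The function name allocate_slots is made up for this example and is not part of redis-cli.

```python
from typing import List, Tuple


def allocate_slots(masters: int, total_slots: int = 16384) -> List[Tuple[int, int]]:
    """Split total_slots into contiguous (first, last) ranges, one per master."""
    slots_per_node = total_slots / masters  # 3276.8 for 5 masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(masters):
        last = round(cursor + slots_per_node - 1)
        # The final master always ends at the last slot.
        if i == masters - 1 or last > total_slots - 1:
            last = total_slots - 1
        ranges.append((first, last))
        first = last + 1
        cursor += slots_per_node
    return ranges


for index, (first, last) in enumerate(allocate_slots(5)):
    print(f"Master[{index}] -> Slots {first} - {last}")
```

With --cluster-replicas 1 and 10 nodes there are 5 masters, so four of them receive 3277 slots and one receives 3276, as in the listing above.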

Writing test data

The source cluster needs test data before anything can be migrated. The script used to write it is:

# Write keys k:1 .. k:1000000; -c makes redis-cli follow cluster redirects.
for i in $(seq 1000000); do
    redis-cli -c -h 127.0.0.1 -p 7000 -a 123456 set "k:$i" "v:$i"
done

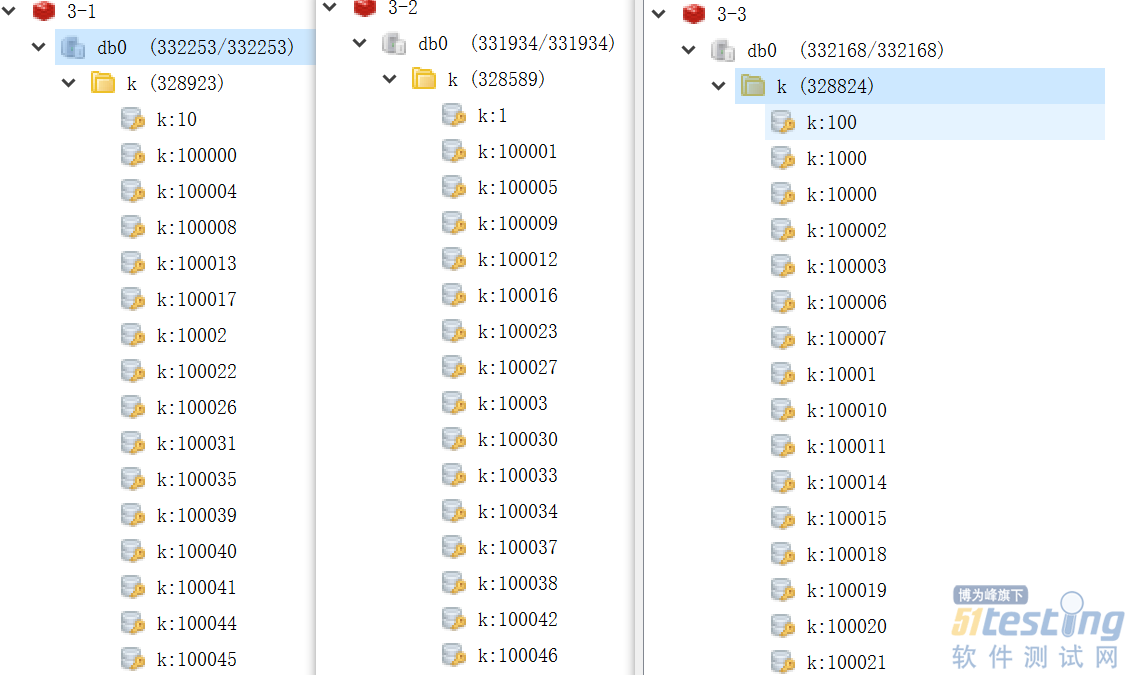

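As shown, the script wrote 1,000,000 keys in total. Redis Cluster partitions data by hashing each key to one of 16384 hash slots, so the keys are spread across the master nodes: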

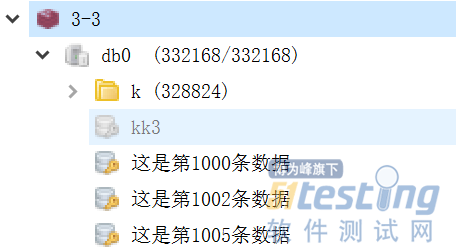
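
To make the partitioning concrete, here is a minimal sketch of how a key maps to a hash slot: CRC16 (XMODEM variant) of the key, modulo 16384, with only the text inside a non-empty {...} hash tag being hashed when one is present. The function name key_hash_slot is chosen for this example; Python's binascii.crc_hqx with an initial value of 0 is used as the CRC16 implementation.

```python
import binascii


def key_hash_slot(key: bytes) -> int:
    """Return the Redis Cluster hash slot (0..16383) for a key."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        # Only a non-empty {...} section counts as a hash tag.
        if end != -1 and end != start + 1:
            key = key[start + 1:end]
    # crc_hqx with initial value 0 computes CRC16/XMODEM.
    return binascii.crc_hqx(key, 0) % 16384


print(key_hash_slot(b"foo"))  # 12182
print(key_hash_slot(b"k:1"))
```

A key such as k:1 lands on whichever master owns the resulting slot, which is why the test keys end up distributed over all masters.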

In addition, to test key filtering, another 100,000 other keys were written.

Redis-Shake test

First, download redis-shake-v2.0.3.tar.gz (the latest release at the time of writing) from GitHub and unpack it.

/mnt/c/Users/wangy $ tar -xvf redis-shake-v2.0.3.tar.gz

./._redis-shake-v2.0.3

./redis-shake-v2.0.3/

./redis-shake-v2.0.3/._.DS_Store

./redis-shake-v2.0.3/.DS_Store

./redis-shake-v2.0.3/ChangeLog

./redis-shake-v2.0.3/redis-shake.linux

./redis-shake-v2.0.3/redis-shake.conf

./redis-shake-v2.0.3/redis-shake.windows

./redis-shake-v2.0.3/._stop.sh

./redis-shake-v2.0.3/stop.sh

./redis-shake-v2.0.3/._start.sh

./redis-shake-v2.0.3/start.sh

./redis-shake-v2.0.3/redis-shake.darwin

Open redis-shake.conf and edit it. Only the settings relevant to this test are listed below.

# Type of the source Redis: standalone, sentinel, cluster, or proxy. Note: proxy is currently only used in rump mode.

source.type = cluster

# Source Redis address(es).

source.address = 172.25.253.0:7002;172.25.253.0:7000;172.25.253.0:7001;172.25.253.0:7003;172.25.253.0:7040;172.25.253.0:7005

# password of db/proxy. even if type is sentinel.

source.password_raw = 123456

# auth type, don't modify it

source.auth_type = auth

# Type of the target Redis: standalone, sentinel, cluster, or proxy.

target.type = cluster

target.address = 172.25.253.0:8000;172.25.253.0:8001;172.25.253.0:8002;172.25.253.0:8003;172.25.253.0:8004

# password of db/proxy. even if type is sentinel.

target.password_raw = 123456

# auth type, don't modify it

target.auth_type = auth

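# Filter keys by prefix: only keys starting with one of the listed prefixes pass. Separate prefixes with semicolons. For example, abc lets abc, abc1, and abcxxx through.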

filter.key.whitelist = k:
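
The prefix semantics described for filter.key.whitelist can be illustrated with a few lines of Python. This is a sketch of the behaviour stated in the comment, not redis-shake's own code; the helper names parse_prefix_list and passes_whitelist are invented here, and treating an empty whitelist as "let everything through" is an assumption.

```python
from typing import Iterable, List


def parse_prefix_list(raw: str) -> List[str]:
    """Split a semicolon-separated setting such as 'k:;user:' into prefixes."""
    return [prefix for prefix in raw.split(";") if prefix]


def passes_whitelist(key: str, prefixes: Iterable[str]) -> bool:
    """Return True if the key starts with any whitelisted prefix."""
    prefixes = tuple(prefixes)
    if not prefixes:
        # Assumption: an empty whitelist means no filtering at all.
        return True
    return key.startswith(prefixes)


whitelist = parse_prefix_list("k:")
print(passes_whitelist("k:42", whitelist))     # True
print(passes_whitelist("other:1", whitelist))  # False
```

With the whitelist set to k:, the 1,000,000 keys written by the test script would pass, while keys with any other prefix would be dropped.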

Once the configuration is in place, start redis-shake with the following command:

/mnt/c/Users/wangy/redis-shake-v2.0.3 $ ./redis-shake.linux -type=sync -conf=redis-shake.conf

2021/03/20 15:55:10 [WARN] source.auth_type[auth] != auth

2021/03/20 15:55:10 [WARN] target.auth_type[auth] != auth

2021/03/20 15:55:10 [INFO] the target redis type is cluster, only pass db0

2021/03/20 15:55:10 [INFO] source rdb[172.25.253.0:7002] checksum[yes]

2021/03/20 15:55:10 [INFO] source rdb[172.25.253.0:7000] checksum[yes]

2021/03/20 15:55:10 [INFO] source rdb[172.25.253.0:7001] checksum[yes]

2021/03/20 15:55:10 [WARN]

______________________________

\ \ _ ______ |

\ \ / \___-=O'/|O'/__|

\ RedisShake, here we go !! \_______\ / | / )

/ / '/-==__ _/__|/__=-| -GM

/ Alibaba Cloud / * \ | |

/ / (o)

------------------------------

if you have any problem, please visit https://github.com/alibaba/RedisShake/wiki/FAQ

2021/03/20 15:55:10 [INFO] redis-shake configuration: {"ConfVersion":1,"Id":"redis-shake","LogFile":"","LogLevel":"info","SystemProfile":9310,"HttpProfile":9320,"Parallel":32,"SourceType":"cluster","SourceAddress":"172.25.253.0:7002;172.25.253.0:7000;172.25.253.0:7001","SourcePasswordRaw":"***","SourcePasswordEncoding":"***","SourceAuthType":"auth","SourceTLSEnable":false,"SourceRdbInput":["local"],"SourceRdbParallel":3,"SourceRdbSpecialCloud":"","TargetAddress":"172.25.253.0:8000;172.25.253.0:8001;172.25.253.0:8002;172.25.253.0:8003;172.25.253.0:8004","TargetPasswordRaw":"***","TargetPasswordEncoding":"***","TargetDBString":"-1","TargetAuthType":"auth","TargetType":"cluster","TargetTLSEnable":false,"TargetRdbOutput":"local_dump","TargetVersion":"5.0.12","FakeTime":"","KeyExists":"none","FilterDBWhitelist":["0"],"FilterDBBlacklist":[],"FilterKeyWhitelist":[],"FilterKeyBlacklist":[],"FilterSlot":[],"FilterLua":false,"BigKeyThreshold":524288000,"Metric":true,"MetricPrintLog":false,"SenderSize":104857600,"SenderCount":4095,"SenderDelayChannelSize":65535,"KeepAlive":0,"PidPath":"","ScanKeyNumber":50,"ScanSpecialCloud":"","ScanKeyFile":"","Qps":200000,"ResumeFromBreakPoint":false,"Psync":true,"NCpu":0,"HeartbeatUrl":"","HeartbeatInterval":10,"HeartbeatExternal":"","HeartbeatNetworkInterface":"","ReplaceHashTag":false,"ExtraInfo":false,"SockFileName":"","SockFileSize":0,"FilterKey":null,"FilterDB":"","Rewrite":false,"SourceAddressList":["172.25.253.0:7002","172.25.253.0:7000","172.25.253.0:7001"],"TargetAddressList":["172.25.253.0:8000","172.25.253.0:8001","172.25.253.0:8002","172.25.253.0:8003","172.25.253.0:8004"],"SourceVersion":"5.0.12","HeartbeatIp":"127.0.0.1","ShiftTime":0,"TargetReplace":true,"TargetDB":-1,"Version":"develop,d7cb0297f121e2f441d3636ef363871aa2ab5e25,go1.14.4,2020-07-24_17:19:07","Type":"sync"}

2021/03/20 15:55:10 [INFO] DbSyncer[0] starts syncing data from 172.25.253.0:7002 to [172.25.253.0:8000 172.25.253.0:8001 172.25.253.0:8002 172.25.253.0:8003 172.25.253.0:8004] with http[9323], enableResumeFromBreakPoint[false], slot boundary[-1, -1]

2021/03/20 15:55:10 [INFO] DbSyncer[2] starts syncing data from 172.25.253.0:7001 to [172.25.253.0:8000 172.25.253.0:8001 172.25.253.0:8002 172.25.253.0:8003 172.25.253.0:8004] with http[9323], enableResumeFromBreakPoint[false], slot boundary[-1, -1]

2021/03/20 15:55:10 [INFO] DbSyncer[1] starts syncing data from 172.25.253.0:7000 to [172.25.253.0:8000 172.25.253.0:8001 172.25.253.0:8002 172.25.253.0:8003 172.25.253.0:8004] with http[9323], enableResumeFromBreakPoint[false], slot boundary[-1, -1]

2021/03/20 15:55:10 [INFO] DbSyncer[1] psync connect '172.25.253.0:7000' with auth type[auth] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[0] psync connect '172.25.253.0:7002' with auth type[auth] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[2] psync connect '172.25.253.0:7001' with auth type[auth] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[1] psync send listening port[9320] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[2] psync send listening port[9320] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[0] psync send listening port[9320] OK!

2021/03/20 15:55:10 [INFO] DbSyncer[1] try to send 'psync' command: run-id[?], offset[-1]

2021/03/20 15:55:10 [INFO] DbSyncer[2] try to send 'psync' command: run-id[?], offset[-1]

2021/03/20 15:55:10 [INFO] DbSyncer[0] try to send 'psync' command: run-id[?], offset[-1]

2021/03/20 15:55:10 [INFO] Event:FullSyncStart Id:redis-shake

2021/03/20 15:55:10 [INFO] DbSyncer[1] psync runid = 4a2a6e97a05ea7c3ade676209efea6bab61638bc, offset = 444709, fullsync

2021/03/20 15:55:10 [INFO] Event:FullSyncStart Id:redis-shake

2021/03/20 15:55:10 [INFO] DbSyncer[0] psync runid = 3889e6f11bb538d4c21fcd148e9c35715c316a90, offset = 355726, fullsync

2021/03/20 15:55:10 [INFO] Event:FullSyncStart Id:redis-shake

2021/03/20 15:55:10 [INFO] DbSyncer[2] psync runid = 8be6cbd6f67e8b66867e8ca8a3e9cba755c84008, offset = 444493, fullsync

2021/03/20 15:55:11 [INFO] DbSyncer[0] rdb file size = 200163

2021/03/20 15:55:11 [INFO] Aux information key:redis-ver value:5.0.12

2021/03/20 15:55:11 [INFO] Aux information key:redis-bits value:64

2021/03/20 15:55:11 [INFO] Aux information key:ctime value:1616226910

2021/03/20 15:55:11 [INFO] Aux information key:used-mem value:3310920

2021/03/20 15:55:11 [INFO] Aux information key:repl-stream-db value:0

2021/03/20 15:55:11 [INFO] Aux information key:repl-id value:3889e6f11bb538d4c21fcd148e9c35715c316a90

2021/03/20 15:55:11 [INFO] Aux information key:repl-offset value:355726

2021/03/20 15:55:11 [INFO] Aux information key:aof-preamble value:0

2021/03/20 15:55:11 [INFO] db_size:3344 expire_size:0

2021/03/20 15:55:11 [INFO] DbSyncer[2] rdb file size = 223563

2021/03/20 15:55:11 [INFO] Aux information key:redis-ver value:5.0.12

2021/03/20 15:55:11 [INFO] Aux information key:redis-bits value:64

2021/03/20 15:55:11 [INFO] Aux information key:ctime value:1616226910

2021/03/20 15:55:11 [INFO] Aux information key:used-mem value:3380008

2021/03/20 15:55:11 [INFO] Aux information key:repl-stream-db value:0

2021/03/20 15:55:11 [INFO] Aux information key:repl-id value:8be6cbd6f67e8b66867e8ca8a3e9cba755c84008

2021/03/20 15:55:11 [INFO] Aux information key:repl-offset value:444493

2021/03/20 15:55:11 [INFO] Aux information key:aof-preamble value:0

2021/03/20 15:55:11 [INFO] db_size:3345 expire_size:0

2021/03/20 15:55:11 [INFO] DbSyncer[1] rdb file size = 222914

2021/03/20 15:55:11 [INFO] Aux information key:redis-ver value:5.0.12

2021/03/20 15:55:11 [INFO] Aux information key:redis-bits value:64

2021/03/20 15:55:11 [INFO] Aux information key:ctime value:1616226910

2021/03/20 15:55:11 [INFO] Aux information key:used-mem value:3375512

2021/03/20 15:55:11 [INFO] Aux information key:repl-stream-db value:0

2021/03/20 15:55:11 [INFO] Aux information key:repl-id value:4a2a6e97a05ea7c3ade676209efea6bab61638bc

2021/03/20 15:55:11 [INFO] Aux information key:repl-offset value:444709

2021/03/20 15:55:11 [INFO] Aux information key:aof-preamble value:0

2021/03/20 15:55:11 [INFO] db_size:3330 expire_size:0

2021/03/20 15:55:12 [INFO] DbSyncer[0] total = 195.47KB - 127.74KB [ 65%] entry=1005

2021/03/20 15:55:12 [INFO] DbSyncer[2] total = 218.32KB - 124.72KB [ 57%] entry=949

2021/03/20 15:55:12 [INFO] DbSyncer[1] total = 217.69KB - 151.26KB [ 69%] entry=1016

2021/03/20 15:55:13 [INFO] DbSyncer[0] total = 195.47KB - 184.66KB [ 94%] entry=2109

2021/03/20 15:55:13 [INFO] DbSyncer[2] total = 218.32KB - 217.07KB [ 99%] entry=2296

2021/03/20 15:55:13 [INFO] DbSyncer[1] total = 217.69KB - 210.68KB [ 96%] entry=2169

2021/03/20 15:55:14 [INFO] DbSyncer[0] total = 195.47KB - 195.47KB [100%] entry=3097

2021/03/20 15:55:14 [INFO] DbSyncer[2] total = 218.32KB - 218.32KB [100%] entry=3311

2021/03/20 15:55:14 [INFO] DbSyncer[2] total = 218.32KB - 218.32KB [100%] entry=3345

2021/03/20 15:55:14 [INFO] DbSyncer[2] sync rdb done

2021/03/20 15:55:14 [INFO] DbSyncer[1] total = 217.69KB - 217.69KB [100%] entry=3221

2021/03/20 15:55:14 [INFO] DbSyncer[2] FlushEvent:IncrSyncStart Id:redis-shake

2021/03/20 15:55:14 [INFO] DbSyncer[0] total = 195.47KB - 195.47KB [100%] entry=3344

2021/03/20 15:55:14 [INFO] DbSyncer[0] sync rdb done

2021/03/20 15:55:14 [INFO] DbSyncer[0] FlushEvent:IncrSyncStart Id:redis-shake

2021/03/20 15:55:14 [INFO] DbSyncer[1] total = 217.69KB - 217.69KB [100%] entry=3330

2021/03/20 15:55:14 [INFO] DbSyncer[1] sync rdb done

2021/03/20 15:55:14 [INFO] DbSyncer[1] FlushEvent:IncrSyncStart Id:redis-shake

2021/03/20 15:55:15 [INFO] DbSyncer[2] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:15 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:15 [INFO] DbSyncer[1] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:16 [INFO] DbSyncer[2] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:16 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:16 [INFO] DbSyncer[1] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/03/20 15:55:17 [INFO] DbSyncer[2] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4

...

When "sync rdb done" appears in the log for a DbSyncer, its full synchronization is complete and incremental synchronization follows.
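
Watching for that message by hand gets tedious with several source nodes. The sketch below scans log lines for the "sync rdb done" marker and reports which DbSyncer indexes have finished the full phase. The regular expression is based only on the log format shown above, and the function name finished_syncers is made up for this example.

```python
import re

# Matches lines such as "... [INFO] DbSyncer[2] sync rdb done".
SYNC_DONE = re.compile(r"DbSyncer\[(\d+)\] sync rdb done")


def finished_syncers(log_lines):
    """Return the set of DbSyncer indexes that logged 'sync rdb done'."""
    done = set()
    for line in log_lines:
        match = SYNC_DONE.search(line)
        if match:
            done.add(int(match.group(1)))
    return done


sample = [
    "2021/03/20 15:55:14 [INFO] DbSyncer[2] sync rdb done",
    "2021/03/20 15:55:14 [INFO] DbSyncer[1] total = 217.69KB - 217.69KB [100%] entry=3221",
    "2021/03/20 15:55:14 [INFO] DbSyncer[0] sync rdb done",
    "2021/03/20 15:55:14 [INFO] DbSyncer[1] sync rdb done",
]
print(sorted(finished_syncers(sample)))  # [0, 1, 2]
```

In this run there are three DbSyncers (0, 1, and 2), so the full phase is over once all three indexes are in the returned set.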

In this test, redis-shake migrated the data of a Redis cluster quickly.

Issues

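· Network connectivity: confirm that the required ports are reachable.

· 1,000,000 keys: about 4 minutes.

· Full versus partial migration.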
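
For the connectivity point, a quick TCP check from the machine that runs redis-shake can rule out firewall or routing problems before a migration starts. The following is a minimal sketch using only the Python standard library; the helper names port_is_open and unreachable are invented here, and the check only confirms that a TCP connection can be opened, not that Redis authentication succeeds.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def unreachable(addresses: str, timeout: float = 2.0) -> list:
    """Return the entries of a semicolon-separated host:port list that cannot be reached."""
    failed = []
    for address in addresses.split(";"):
        host, _, port = address.rpartition(":")
        if not port_is_open(host, int(port), timeout):
            failed.append(address)
    return failed
```

Calling unreachable() with the value of source.address or target.address from redis-shake.conf returns an empty list when every listed node accepts connections.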

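Copyright notice: This article was contributed by a 51Testing member. 51Testing and the related content providers hold all rights to the content. Without express written permission, no person or organization may copy, reproduce, or mirror the content of this site; violators will be held legally liable.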