二. DML命令

1、加载数据

现有一张表,建表语句如下所示:

CREATE TABLE login (

uid BIGINT,

ip STRING

)

PARTITIONED BY (pt string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

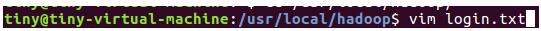

退出Hive Shell,创建login.txt

11151007001,192.168.1.1

11151007002,192.168.1.2

创建login2.txt

11151007003,192.168.1.3

11151007004,192.168.1.4

加载本地数据到Hive表(再打开Hive Shell,并且要重新USE testdb4;)

LOAD DATA LOCAL INPATH '/usr/local/hadoop/login.txt' OVERWRITE INTO TABLE login PARTITION (pt='20161221');

SELECT * FROM LOGIN;

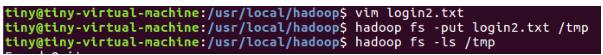

加载HDFS中的文件

LOAD DATA INPATH '/tmp/login2.txt' INTO TABLE login PARTITION (pt='20161221');

SELECT *FROM LOGIN;

2、查询结果插入到表

单表插入

CREATE TABLE login2(uid BIGINT);

INSERT OVERWRITE TABLE login2 SELECT DISTINCT uid FROM login;

多表插入

CREATE TABLE login_ip(ip STRING);

CREATE TABLE login_uid(uid BIGINT);

FROM login

INSERT OVERWRITE TABLE login_uid

SELECT uid

INSERT OVERWRITE TABLE login_ip

SELECT ip;

查询结果输出到文件系统中

FROM login

INSERT OVERWRITE LOCAL DIRECTORY '/usr/local/hadoop/login' SELECT *

INSERT OVERWRITE DIRECTORY '/tmp/ip' SELECT ip;

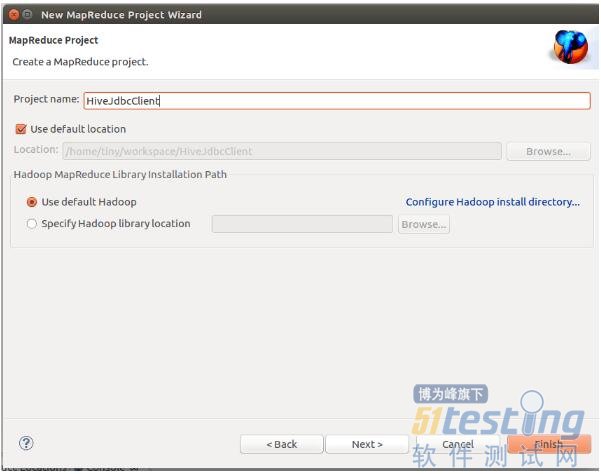

三. HiveJDBC

1、新建MapReduce项目

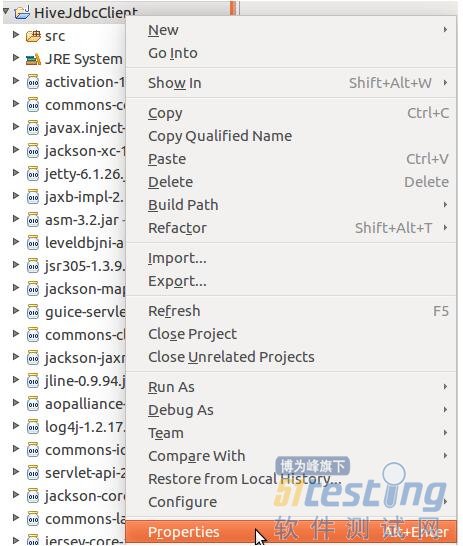

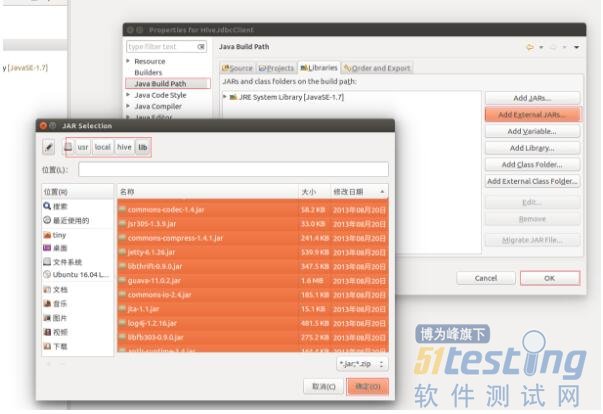

2、右键工程,选择 Properties ,然后在工程中导入外部jar包

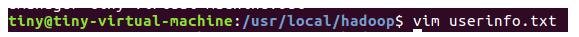

3、创建userinfo.txt文件内容(中间Tab隔开)

1 xiaoping

2 xiaoxue

3 qingqing

4 wangwu

5 zhangsan

6 lisi

4、开启远程服务

hive --service hiveserver

5、JAVA端执行下面代码

import java.sql.Connection; import java.sql.DriverManager; import java.sql.ResultSet; import java.sql.SQLException; import java.sql.Statement; import org.apache.log4j.Logger; public class HiveJdbcClient { private static String driverName = "org.apache.hadoop.hive.jdbc.HiveDriver"; private static String url = "jdbc:hive://localhost:10000/default"; private static String user = ""; private static String password = ""; private static String sql = ""; private static ResultSet res; private static final Logger log = Logger.getLogger(HiveJdbcClient.class); public static void main(String[] args) { try { Class.forName(driverName); Connection conn = DriverManager.getConnection(url, user, password); Statement stmt = conn.createStatement(); String tableName = "testHiveDriverTable"; sql = "drop table " + tableName; stmt.executeQuery(sql); sql = "create table " + tableName + " (key int, value string) row format delimited fields terminated by '\t'"; stmt.executeQuery(sql); sql = "show tables '" + tableName + "'"; System.out.println("Running:" + sql); res = stmt.executeQuery(sql); System.out.println("执行“show tables”运行结果:"); if (res.next()) { System.out.println(res.getString(1)); } sql = "describe " + tableName; System.out.println("Running:" + sql); res = stmt.executeQuery(sql); System.out.println("执行“describe table”运行结果:"); while (res.next()) { System.out.println(res.getString(1) + "\t" + res.getString(2)); } String filepath = "/usr/local/hadoop/userinfo.txt"; sql = "load data local inpath '" + filepath + "' into table " + tableName; System.out.println("Running:" + sql); res = stmt.executeQuery(sql); sql = "select * from " + tableName; System.out.println("Running:" + sql); res = stmt.executeQuery(sql); while (res.next()) { System.out.println(res.getInt(1) + "\t" + res.getString(2)); } sql = "select count(1) from " + tableName; System.out.println("Running:" + sql); res = stmt.executeQuery(sql); System.out.println("执行“regular hive query”运行结果:"); while (res.next()) { System.out.println(res.getString(1)); } conn.close(); conn = null; } catch (ClassNotFoundException e) { e.printStackTrace(); log.error(driverName + " not found!", e); System.exit(1); } catch (SQLException e) { e.printStackTrace(); log.error("Connection error!", e); System.exit(1); } } } |